arna.ml GPU Cloud Management Software enables you to offer a hyperscaler grade self-service on-demand IaaS+PaaS to your shared GPU pool with secure multi-tenancy.

Effortlessly manage and optimize your GPU cloud with aarna.ml

Users can order GPU resources using a variety of means: model serving, job scheduling, fine tuning, or GPU instances

Offer your tenants optimized model serving from Hugging Face, NIM, and private repository.

Empower tenants to submit jobs using KAI (open source Run:ai) with Jupyter Notebook integration.

Enable tenants to securely fine tune foundational models with their private data.

Provide access to hundreds of 3rd party PaaS and MLOps tools; customizable to suit your needs.

Automatically register unused GPUs with NVIDIA DGX Lepton™ Cloud.

We can convert remaining GPUs to a shared LLM or shared job submission endpoint with granular billing.

Dynamic multi tenancy with hard isolation, a powerful admin console, and NVIDIA compliant architecture--built for ultimate GPU optimization.

Scalable GPUaaS for AI Workloads: Multi-Tenancy, Optimization, and Automation.

Unified Multi-Tenancy Management – Automated tenant onboarding with optimal isolation strategies.

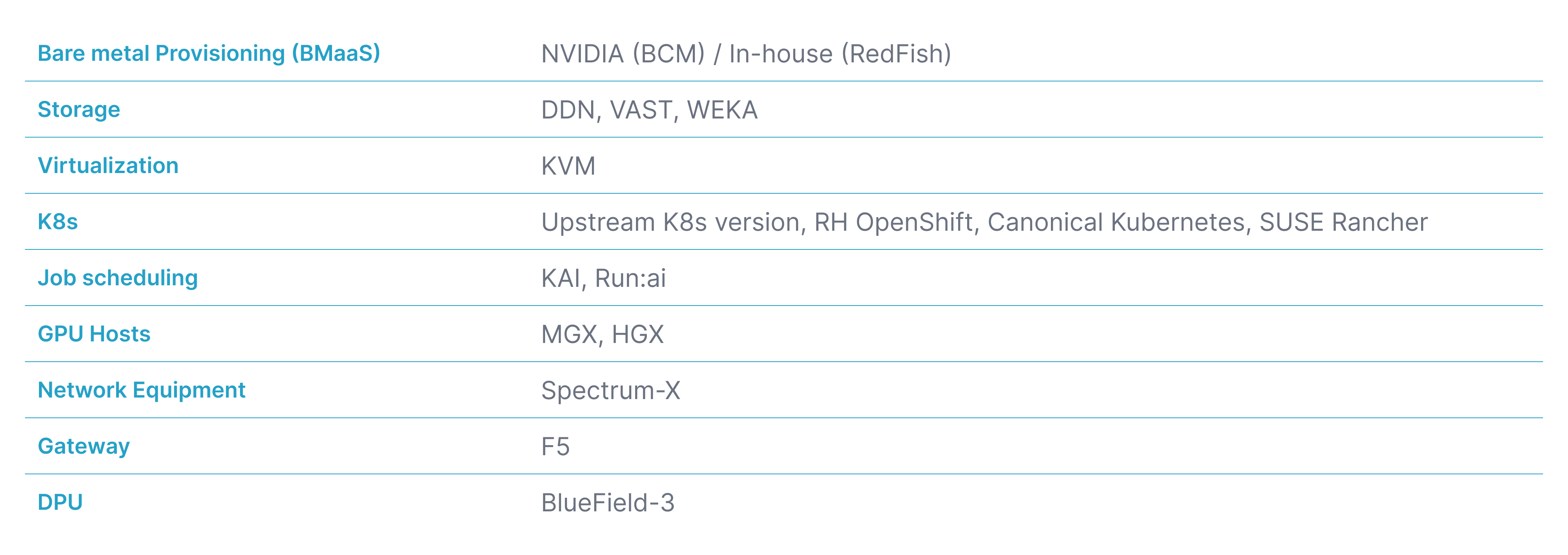

Flexible Service Offerings – Support for IaaS and PaaS, including bare-metal, VMs, Kubernetes, model serving, and job scheduling.

Enhanced Resource Utilization – Smart GPU orchestration for dynamic scaling and efficient workload allocation.

E2E Orchestration - Enable Scalable, Efficient Al Cloud Infrastructure, Platform and Applications.

We offer Premium or Basic Support depending on your particular needs or requirements *

Support hours 24 hours x 7 days OR 8 hours x 5 days

Committed Service Level Agreement (SLA)

Number of operational support incidents

Maintenance support included (access to new versions of software)

Designated support engineer

Community advocacy

Knowledge base

Technical bulletins

*

There is no limit on the number of the tickets as long as the requests are reasonable.

Schedule a demo for a tailored walkthrough.