aarna.ml GPU Cloud Management Software (CMS) Announces Network Automation and Multi-Tenancy for NVIDIA Spectrum-X

The latest release of the aarna.ml GPU CMS adds network automation, observability, fault management, and multi-tenancy for the v1.3 NVIDIA Spectrum-X Reference Architecture (RA). The RA defines an East-West compute network fabric optimized for AI cloud deployments with HGX systems, and a North-South converged network for external access, storage, and control plane traffic. As part of this release, the aarna.ml GPU CMS supports NVIDIA Spectrum-4 SN5000 Series Ethernet switches, NVIDIA Cumulus Linux, NVIDIA BlueField-3 SuperNICs and DPUs, the NVIDIA NetQ AI observability and telemetry platform, and the NVIDIA Air data center digital twin platform, along with NVIDIA HGX H100/H200 nodes.

AI clouds run by NVIDIA Cloud Partners (NCPs), as well as enterprise AI clouds, must provide hard isolation between tenants. The aarna.ml GPU CMS addresses this across seven pillars: high-performance networking, InfiniBand fabrics, NVLink GPU interconnects, scalable storage, virtual private clouds (VPCs), compute resources, and GPUs. By enforcing isolation and performance in each pillar, we ensure tenants can run demanding AI workloads securely on shared hardware without compromising performance. This blog focuses on the high-performance networking pillar.

Switch Fabric Automation with aarna.ml GPU CMS

A modern GPU data center switch fabric typically comprises multiple segmented networks, most notably the East-West (compute) network and the North-South (converged) network. The North-South network itself carries in-band management, storage, and external/tenant access traffic. These networks can be built on Ethernet or InfiniBand, depending on performance and isolation requirements.

As defined by the NVIDIA Spectrum-X Reference Architecture, a single Scalable Unit (SU) contains:

- 32 GPU nodes

- 12 switches

- 256 physical cable connections

This topology serves just 256 GPUs (32 nodes with 8 GPUs each), which highlights the operational complexity at scale. Without automation, managing such fabrics, especially across multiple SUs, becomes impractical and error-prone. The aarna.ml GPU CMS addresses this challenge by offering:

- Topology auto-discovery

- Underlay configuration

- Lifecycle automation for switch configurations

- Overlay network creation for tenant-level isolation

- Integration with compute orchestration pipelines

This enables scalable, intent-driven management of switch fabrics, ensuring that GPU resources remain performant, isolated, and easy to provision.
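The sketch below is a back-of-the-envelope sizing calculation that makes the scale argument concrete. It multiplies the per-SU counts quoted above by the number of SUs and assumes 8 GPUs per HGX H100/H200 node. It deliberately ignores any spine or super-spine layer shared between SUs, so the switch and cable totals are lower bounds. The names `size_fabric` and `FabricSize` are illustrative, not part of any aarna.ml API.

```python
from dataclasses import dataclass

# Per-SU counts quoted above for the Spectrum-X RA.
NODES_PER_SU = 32
SWITCHES_PER_SU = 12
CABLES_PER_SU = 256
# Assumption: one 8-GPU HGX H100/H200 baseboard per node.
GPUS_PER_NODE = 8


@dataclass(frozen=True)
class FabricSize:
    sus: int
    nodes: int
    gpus: int
    switches: int
    cables: int


def size_fabric(num_sus: int) -> FabricSize:
    """Scale the per-SU counts linearly to num_sus SUs.

    Simplification: any spine/super-spine layer shared between SUs is not
    counted, so real multi-SU switch and cable totals will be higher.
    """
    if num_sus < 1:
        raise ValueError("num_sus must be at least 1")
    nodes = num_sus * NODES_PER_SU
    return FabricSize(
        sus=num_sus,
        nodes=nodes,
        gpus=nodes * GPUS_PER_NODE,
        switches=num_sus * SWITCHES_PER_SU,
        cables=num_sus * CABLES_PER_SU,
    )


if __name__ == "__main__":
    for n in (1, 4, 16):
        s = size_fabric(n)
        print(f"{s.sus:>3} SU(s): {s.gpus:>5} GPUs, {s.switches:>4} switches, "
              f"{s.cables:>5} cables (excluding shared spines)")
```

For one SU this gives 32 × 8 = 256 GPUs, matching the figure above. Every additional SU adds another 12 switches and 256 cables that must be discovered, configured, and kept consistent.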

BlueField-3 SuperNIC Spectrum-X Configuration

The aarna.ml GPU CMS automates all the tasks needed to turn a BlueField-3 equipped server into a Spectrum-X host. When a new host is provisioned, the GPU CMS installs the DOCA Host Packages, enabling key services and libraries. It also brings up the DOCA Management Service Daemon (DMSD), which runs on the host and acts as the control point for the SuperNIC.

After bringing up DMSD, the GPU CMS controller uses it to configure the BlueField-3 SuperNIC with Spectrum-X capabilities such as RoCE, congestion control, adaptive routing, and IP routing. This makes the host fully Spectrum-X aware and ready to participate in high-performance GPU-to-GPU networking, where multi-tenancy relies on BGP EVPN running on Cumulus Linux on the Spectrum-X switches.

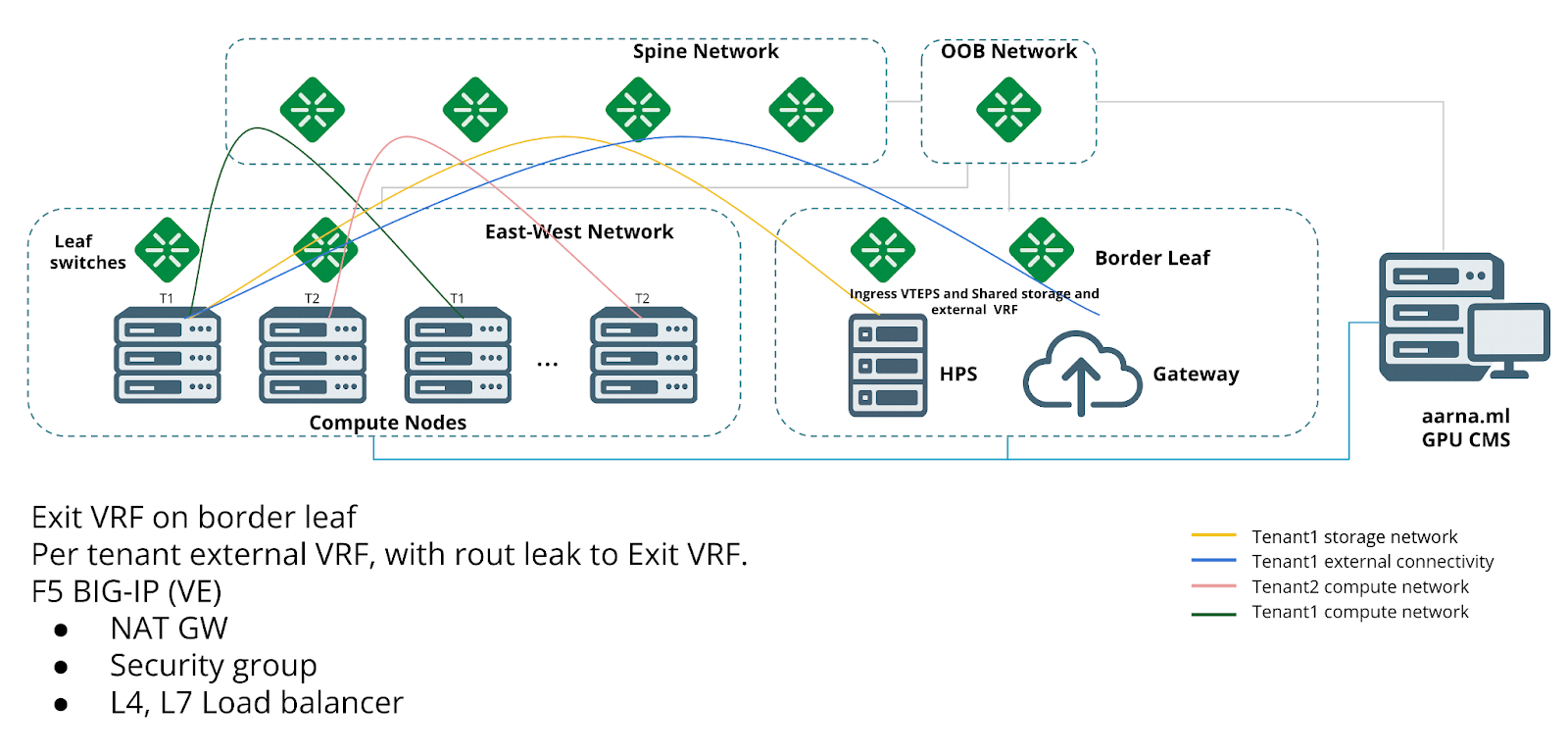

Network Multi-Tenancy

The figure above shows how the aarna.ml GPU CMS creates tenant-isolated overlay networks on the Ethernet switch fabric using VxLAN and VRFs, with BGP as the control plane. From the end user's perspective, the aarna.ml GPU CMS provides the ability to define Virtual Private Clouds (VPCs) with multiple subnets, much like a traditional public cloud. Behind the scenes, each VPC is backed by an isolated VRF (Virtual Routing and Forwarding) instance and each subnet corresponds to a unique VxLAN segment.

This entire abstraction is enabled by NVIDIA Cumulus Linux running on NVIDIA Spectrum-X switches, which exposes programmable Linux-based network primitives. Using the structured command interface of NVIDIA User Experience (NVUE), the GPU CMS dynamically configures VRFs, VxLANs, VLANs, and BGP sessions on the switch fabric. The GPU CMS Kubernetes (K8s) controllers execute these NVUE commands as part of the VPC provisioning flow, allowing the GPU CMS to declaratively build scalable, multi-tenant overlay networks that mirror public cloud semantics.
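The function below illustrates the kind of NVUE commands involved by rendering the per-leaf commands for one VRF and its subnets. The command forms are modeled on the EVPN examples in the Cumulus Linux 5.x documentation and should be checked against the release you run. The function and `SubnetSpec` are hypothetical. The VTEP source address, underlay, and BGP/EVPN peering are omitted. This is not the GPU CMS controller's actual code.

```python
import ipaddress
from dataclasses import dataclass
from typing import List


@dataclass
class SubnetSpec:
    vlan: int      # local VLAN mapped to the subnet's VxLAN segment
    vni: int       # L2 VNI of the segment
    svi_cidr: str  # this leaf's address on the subnet, e.g. "10.1.10.2/24"


def render_vpc_nvue(vrf: str, l3_vni: int, subnets: List[SubnetSpec]) -> List[str]:
    """Render NVUE commands for one tenant VRF and its subnets on a leaf.

    Underlay, VTEP source address, and BGP/EVPN peering are out of scope here.
    """
    cmds = ["nv set evpn enable on", f"nv set vrf {vrf} evpn vni {l3_vni}"]
    for s in subnets:
        if not 1 <= s.vlan <= 4094:
            raise ValueError(f"invalid VLAN {s.vlan}")
        if not 1 <= s.vni <= 16_777_215:  # VxLAN VNIs are 24-bit
            raise ValueError(f"invalid VNI {s.vni}")
        ipaddress.ip_interface(s.svi_cidr)  # raises ValueError if malformed
        cmds += [
            # Map the VLAN to its VxLAN segment on the default bridge.
            f"nv set bridge domain br_default vlan {s.vlan} vni {s.vni}",
            # Give the VLAN interface an address and place it in the tenant VRF.
            f"nv set interface vlan{s.vlan} ip address {s.svi_cidr}",
            f"nv set interface vlan{s.vlan} ip vrf {vrf}",
        ]
    cmds.append("nv config apply")
    return cmds


if __name__ == "__main__":
    print("\n".join(render_vpc_nvue("RED", 4001, [SubnetSpec(10, 10, "10.1.10.2/24")])))
```

The call in the `__main__` block yields six commands ending in `nv config apply`. Generating commands from data rather than typing them by hand is what lets a controller reconcile the fabric declaratively.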

This abstraction gives users the flexibility to:

- Create isolated environments for different workloads or projects

- Define fine-grained IP subnetting and routing policies

- Attach load balancers or gateways at subnet edges

- Connect VPCs to storage networks or on-prem environments
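The VPC-to-VRF and subnet-to-VxLAN mapping described above can be sketched as a small allocator. This is a hypothetical model, not aarna.ml's implementation. The class names, the VNI starting values, and the per-VRF L3 VNI (which assumes EVPN symmetric routing) are all illustrative assumptions.

```python
import ipaddress
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Subnet:
    name: str
    cidr: ipaddress.IPv4Network
    vni: int  # L2 VNI of the subnet's VxLAN segment


@dataclass
class Vpc:
    vrf: str
    l3_vni: int  # per-VRF L3 VNI (assumes EVPN symmetric routing)
    subnets: List[Subnet] = field(default_factory=list)


class VpcAllocator:
    """Hypothetical allocator: one VRF per VPC, one VxLAN VNI per subnet."""

    def __init__(self, l2_vni_base: int = 10000, l3_vni_base: int = 4000) -> None:
        self._next_l2 = l2_vni_base
        self._next_l3 = l3_vni_base
        self.vpcs: Dict[str, Vpc] = {}

    def create_vpc(self, tenant: str, name: str) -> Vpc:
        vrf = f"{tenant}-{name}"
        if vrf in self.vpcs:
            raise ValueError(f"VPC {vrf} already exists")
        vpc = Vpc(vrf=vrf, l3_vni=self._next_l3)
        self._next_l3 += 1
        self.vpcs[vrf] = vpc
        return vpc

    def add_subnet(self, vrf: str, name: str, cidr: str) -> Subnet:
        vpc = self.vpcs[vrf]
        net = ipaddress.IPv4Network(cidr)
        # Subnets must not overlap inside one VPC; across VPCs they may,
        # because each VRF keeps its own routing table.
        for existing in vpc.subnets:
            if existing.cidr.overlaps(net):
                raise ValueError(f"{cidr} overlaps {existing.cidr} in {vrf}")
        subnet = Subnet(name=name, cidr=net, vni=self._next_l2)
        self._next_l2 += 1
        vpc.subnets.append(subnet)
        return subnet


if __name__ == "__main__":
    alloc = VpcAllocator()
    a = alloc.create_vpc("tenant-a", "train")
    b = alloc.create_vpc("tenant-b", "train")
    alloc.add_subnet(a.vrf, "gpu", "10.0.0.0/24")
    alloc.add_subnet(b.vrf, "gpu", "10.0.0.0/24")  # allowed: different VRF
    print(alloc.vpcs)
```

Two tenants can reuse `10.0.0.0/24` because each lives in its own VRF, while overlaps inside one VPC are rejected. A production allocator would also persist its state and keep the L2 and L3 VNI ranges from colliding.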

Under the Hood: How aarna.ml GPU CMS Builds Multi-Tenant AI Network Fabrics

VxLAN and VRF-Based Tenant Segmentation

To support multiple tenants securely and efficiently on the same physical fabric, the aarna.ml GPU CMS uses VxLAN (Virtual Extensible LAN) in combination with VRF (Virtual Routing and Forwarding) constructs. This ensures complete L2/L3 network isolation across tenants. Each tenant’s workloads operate within a dedicated overlay network, backed by a separate VRF instance, enabling traffic segmentation, independent routing policies, and security boundaries, without sacrificing performance.
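A toy per-VRF routing table shows why VRFs give each tenant independent routing, even with overlapping address space. This is a conceptual sketch only: real switches do this in hardware with EVPN-learned routes, and the VRF names and next-hop labels below are made up.

```python
import ipaddress
from typing import Dict, List, Optional, Tuple

Route = Tuple[ipaddress.IPv4Network, str]


class VrfRib:
    """Toy per-VRF routing tables: every lookup is confined to one VRF."""

    def __init__(self) -> None:
        self._tables: Dict[str, List[Route]] = {}

    def add_route(self, vrf: str, prefix: str, next_hop: str) -> None:
        self._tables.setdefault(vrf, []).append((ipaddress.IPv4Network(prefix), next_hop))

    def lookup(self, vrf: str, dst: str) -> Optional[str]:
        """Longest-prefix match within `vrf` only; no fallback to other VRFs."""
        addr = ipaddress.IPv4Address(dst)
        best: Optional[Route] = None
        for net, nh in self._tables.get(vrf, []):
            if addr in net and (best is None or net.prefixlen > best[0].prefixlen):
                best = (net, nh)
        return best[1] if best else None


if __name__ == "__main__":
    rib = VrfRib()
    rib.add_route("tenant-a", "10.0.0.0/24", "vtep-leaf01")
    rib.add_route("tenant-b", "10.0.0.0/24", "vtep-leaf07")
    print(rib.lookup("tenant-a", "10.0.0.5"))     # vtep-leaf01
    print(rib.lookup("tenant-b", "10.0.0.5"))     # vtep-leaf07
    print(rib.lookup("tenant-b", "192.168.1.1"))  # None: no route leaks in from other VRFs
```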

Storage Network Isolation

The aarna.ml GPU CMS implements dedicated VRFs for storage traffic, ensuring that each tenant's access to storage is isolated and its performance protected. Whether the tenant uses NFS, NVMe over Fabrics (NVMe-oF), or object storage gateways, the underlying network paths are isolated and tunable per tenant.

In-Band Network Isolation

The aarna.ml GPU CMS supports in-band management and telemetry, while still maintaining tenant isolation. In-band traffic for system management, control plane signaling, and monitoring is handled through logically separate paths within the same Spectrum-X fabric, again using VxLAN/VRF segmentation.

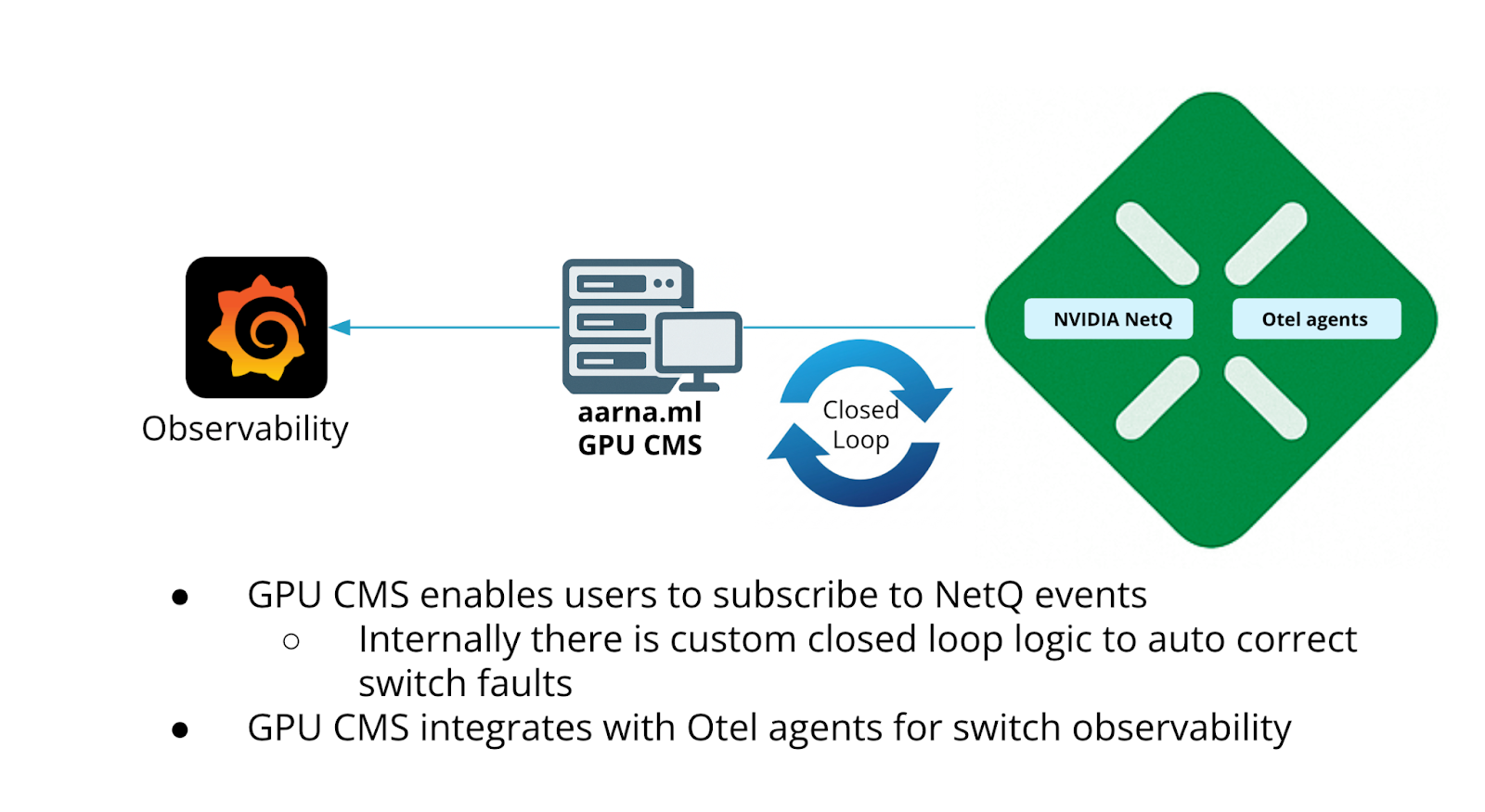

Observability and Fault Management

Cumulus Linux supports OpenTelemetry (OTEL) export using the OpenTelemetry Protocol (OTLP), allowing switch metrics and statistics to be sent to external collectors.

The aarna.ml GPU CMS supports both NetQ-based and OTLP-based telemetry for NVIDIA Spectrum-X switches. The OTLP integration operates independently of NetQ.

Unified Observability with OTLP

- Switch Telemetry via Cumulus NVUE
  - NVIDIA Cumulus Linux on Spectrum-X switches exports telemetry, configured through NVUE commands
  - Metrics exposed:
    - Buffer occupancy histograms
    - Interface-level stats: bandwidth, errors, drops
    - Platform metrics: temperature, fan speed, power
  - Telemetry is exported in OTLP format, enabling seamless integration into the CMS observability pipeline

- SuperNIC Telemetry via DOCA DTS
  - DOCA Telemetry Service (DTS) collects real-time SuperNIC metrics using the DOCA Telemetry library
  - Supported telemetry types:
    - High-Frequency Telemetry (HFT)
    - Programmable Congestion Control (PCC)
  - Ingests metrics from various sources:
    - AMBER counters
    - ethtool counters
    - sysfs, among others
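On the collector side, OTLP metrics have to be flattened before they can feed dashboards or fault rules. The sketch below walks an OTLP/JSON metrics payload and yields one record per gauge or sum data point. It follows the `resourceMetrics` → `scopeMetrics` → `metrics` → `dataPoints` nesting defined by the OTLP specification. The metric names in the test payload are hypothetical, since the actual names Cumulus Linux and DTS emit are not listed here. Histogram points such as buffer occupancy are skipped for brevity.

```python
from typing import Any, Dict, Iterator, List, Optional, Tuple

_VALUE_KEYS = ("stringValue", "intValue", "doubleValue", "boolValue")


def _attrs(kvs: Optional[List[Dict[str, Any]]]) -> Dict[str, Any]:
    """Convert an OTLP/JSON KeyValue list into a plain dict."""
    out: Dict[str, Any] = {}
    for kv in kvs or []:
        value = kv.get("value", {})
        for key in _VALUE_KEYS:
            if key in value:
                out[kv["key"]] = value[key]
                break
    return out


def iter_points(payload: Dict[str, Any]) -> Iterator[Tuple[str, Dict[str, Any], float]]:
    """Yield (metric name, merged attributes, value) for gauge and sum points.

    Histogram points (e.g. buffer occupancy) are skipped: they carry
    bucketCounts/explicitBounds rather than a single value.
    """
    for rm in payload.get("resourceMetrics", []):
        resource = _attrs(rm.get("resource", {}).get("attributes"))
        for sm in rm.get("scopeMetrics", []):
            for metric in sm.get("metrics", []):
                for kind in ("gauge", "sum"):
                    for dp in metric.get(kind, {}).get("dataPoints", []):
                        if "asDouble" in dp:
                            value = float(dp["asDouble"])
                        elif "asInt" in dp:
                            # int64 values are string-encoded in OTLP/JSON
                            value = float(int(dp["asInt"]))
                        else:
                            continue
                        labels = {**resource, **_attrs(dp.get("attributes"))}
                        yield metric["name"], labels, value
```

Merging resource attributes (for example the switch hostname) into each point's labels lets downstream fault rules key on switch and interface without walking the nested payload again.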

Infrastructure administrators can subscribe to NetQ events directly from the GPU CMS. When a subscribed event fires (e.g., link down or configuration diff), the aarna.ml GPU CMS invokes its own remediation logic to auto-correct the fault without manual intervention, which can substantially reduce operating expenses (OPEX) for customers.

NetQ Integration

NetQ monitors switches and publishes fault events. The CMS subscribes to these events and applies real-time auto-correction flows.

The CMS subscribes to NetQ events for the following fault scenarios and manages auto-correction for each:

- Switch Failure Detection: Power loss or hardware faults on switches are detected promptly, triggering alerts and automated mitigation workflows.

- Link Failure Detection: Real-time monitoring of physical interfaces allows rapid identification of link failures, port errors, and degradation.

- Configuration Drift Detection: Configuration changes—such as interface resets or unauthorized modifications—are continuously audited to detect and flag drifts from the intended state.

- BGP Session State Monitoring: Changes in BGP session status, including flaps and neighbor disconnects, are actively monitored to maintain routing stability.
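The subscribe-and-remediate loop can be pictured as an event dispatcher that maps each fault type to a playbook. Everything here is hypothetical: the event kinds, the `FabricEvent` shape, and the remediation steps are placeholders, because this post does not describe the actual NetQ event payloads or the GPU CMS remediation logic. The steps are returned as plain strings rather than executed.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class FabricEvent:
    kind: str    # hypothetical kinds: switch_down, link_down, config_drift, bgp_down
    switch: str
    detail: Dict[str, str] = field(default_factory=dict)


Handler = Callable[[FabricEvent], List[str]]


class Remediator:
    """Routes fault events to registered handlers that return remediation steps."""

    def __init__(self) -> None:
        self._handlers: Dict[str, Handler] = {}

    def on(self, kind: str) -> Callable[[Handler], Handler]:
        def register(fn: Handler) -> Handler:
            self._handlers[kind] = fn
            return fn
        return register

    def handle(self, event: FabricEvent) -> List[str]:
        handler = self._handlers.get(event.kind)
        if handler is None:
            # Anything without an automated playbook goes to a human.
            return [f"escalate {event.kind} on {event.switch} to operator"]
        return handler(event)


remediator = Remediator()


@remediator.on("switch_down")
def _switch_down(ev: FabricEvent) -> List[str]:
    return [f"cordon GPU nodes attached to {ev.switch}", f"alert operator: {ev.switch} unreachable"]


@remediator.on("link_down")
def _link_down(ev: FabricEvent) -> List[str]:
    port = ev.detail.get("port", "unknown")
    return [f"shift tenant traffic off {ev.switch}:{port}", f"open cabling ticket for {ev.switch}:{port}"]


@remediator.on("config_drift")
def _config_drift(ev: FabricEvent) -> List[str]:
    return [f"re-apply intended NVUE config to {ev.switch}", f"re-verify {ev.switch} against intent"]


@remediator.on("bgp_down")
def _bgp_down(ev: FabricEvent) -> List[str]:
    peer = ev.detail.get("peer", "unknown")
    return [f"check link and config toward {peer} on {ev.switch}",
            f"reset BGP session {ev.switch}->{peer} if it does not recover"]
```

Event types without a handler fall through to operator escalation, so extending the automation is a matter of registering another handler.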

Topology Validation

Infrastructure administrators can trigger NetQ topology validation through the aarna.ml GPU CMS platform to verify that the discovered switch fabric matches the intended topology design. This keeps the actual network state consistent with the network intent and can save hours to days of manual troubleshooting.
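NetQ performs this validation itself; the sketch below only illustrates the underlying comparison. It assumes discovered links are available as (device, port) pairs, for example from LLDP neighbor data. Links are treated as unordered, so either cable end can be listed first. The function name and return keys are illustrative.

```python
from typing import Dict, FrozenSet, Iterable, Set, Tuple

Endpoint = Tuple[str, str]   # (device, port)
Link = FrozenSet[Endpoint]   # unordered pair of endpoints
Pair = Tuple[Endpoint, Endpoint]


def _links(pairs: Iterable[Pair]) -> Set[Link]:
    return {frozenset(pair) for pair in pairs}


def validate_topology(intended: Iterable[Pair], discovered: Iterable[Pair]) -> Dict[str, Set]:
    """Diff intended cabling against discovered cabling."""
    want, have = _links(intended), _links(discovered)
    missing = want - have
    unexpected = have - want
    # A port that sits on both a missing and an unexpected link is likely miswired.
    missing_ports = {ep for link in missing for ep in link}
    unexpected_ports = {ep for link in unexpected for ep in link}
    return {
        "missing": missing,
        "unexpected": unexpected,
        "miswired_ports": missing_ports & unexpected_ports,
    }
```

Separating miswired ports from links that are simply absent helps direct a technician to a swapped cable rather than a dead one.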

NVIDIA Air Integration

The aarna.ml GPU CMS integration with Spectrum-X is extensively validated on NVIDIA Air (data center digital twin), enabling realistic simulation and demonstration of features in a virtual environment.

- Storage Integration: The aarna.ml GPU CMS supports vanilla NFS servers deployed on Ubuntu VMs and is integrated with major storage vendors such as DDN and VAST (with WEKA integration in progress). These vendor integrations can be demonstrated on NVIDIA Air once vendor-provided VM images are available.

- External Gateway Integration: The border leaf configurations are tested in conjunction with an F5 BIG-IP gateway deployed as a VM on NVIDIA Air to simulate enterprise gateway integration scenarios.

External Connectivity

External connectivity is a key consideration for NCPs, and potentially for enterprises, although it is outside the scope of the Spectrum-X RA. The aarna.ml GPU CMS provides it in conjunction with F5 BIG-IP Virtual Edition.

Border Leaf Configuration

At the edge of each tenant's network, border leaf switches enforce routing policies, external connectivity controls (e.g., NAT, firewall rules), and traffic shaping. The aarna.ml GPU CMS automatically configures the border leaves to bridge the isolated VxLAN/VRF overlays to tenant-owned infrastructure or shared services, a model that also supports hybrid-cloud extensions.

User Interface

The aarna.ml GPU CMS provides an intuitive UI for infrastructure management, user and workload lifecycle management, and observability. In addition, it offers REST APIs for infrastructure, user, and workload management tasks.

Global Customer Deployments

Our platform is already in use with Spectrum-X by NCPs in multiple regions. For example, a leading Southeast Asian telecom operator is deploying an AI compute grid for distributed inference, and a top U.S. NCP is building an AI-and-RAN edge platform whose announcement explicitly notes it “will also support multi-tenancy for multiple use cases or customers”.